TKG Shared AVI SE Group using Antrea NodePortLocal

Introduction

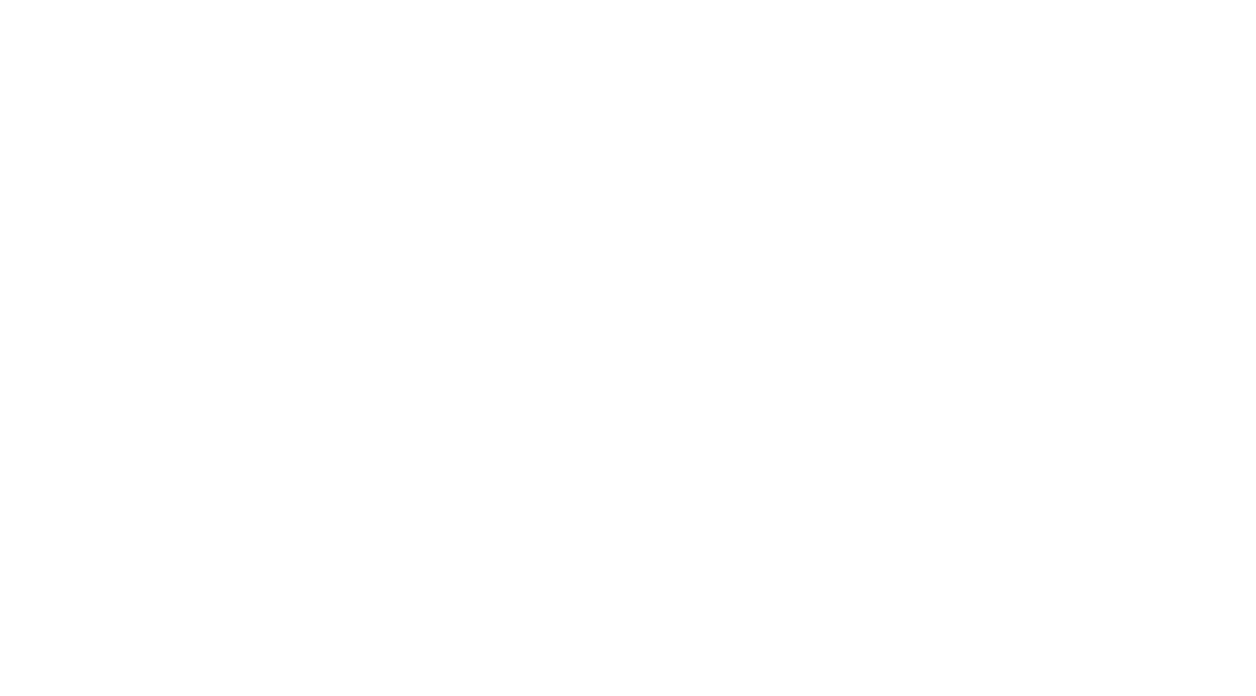

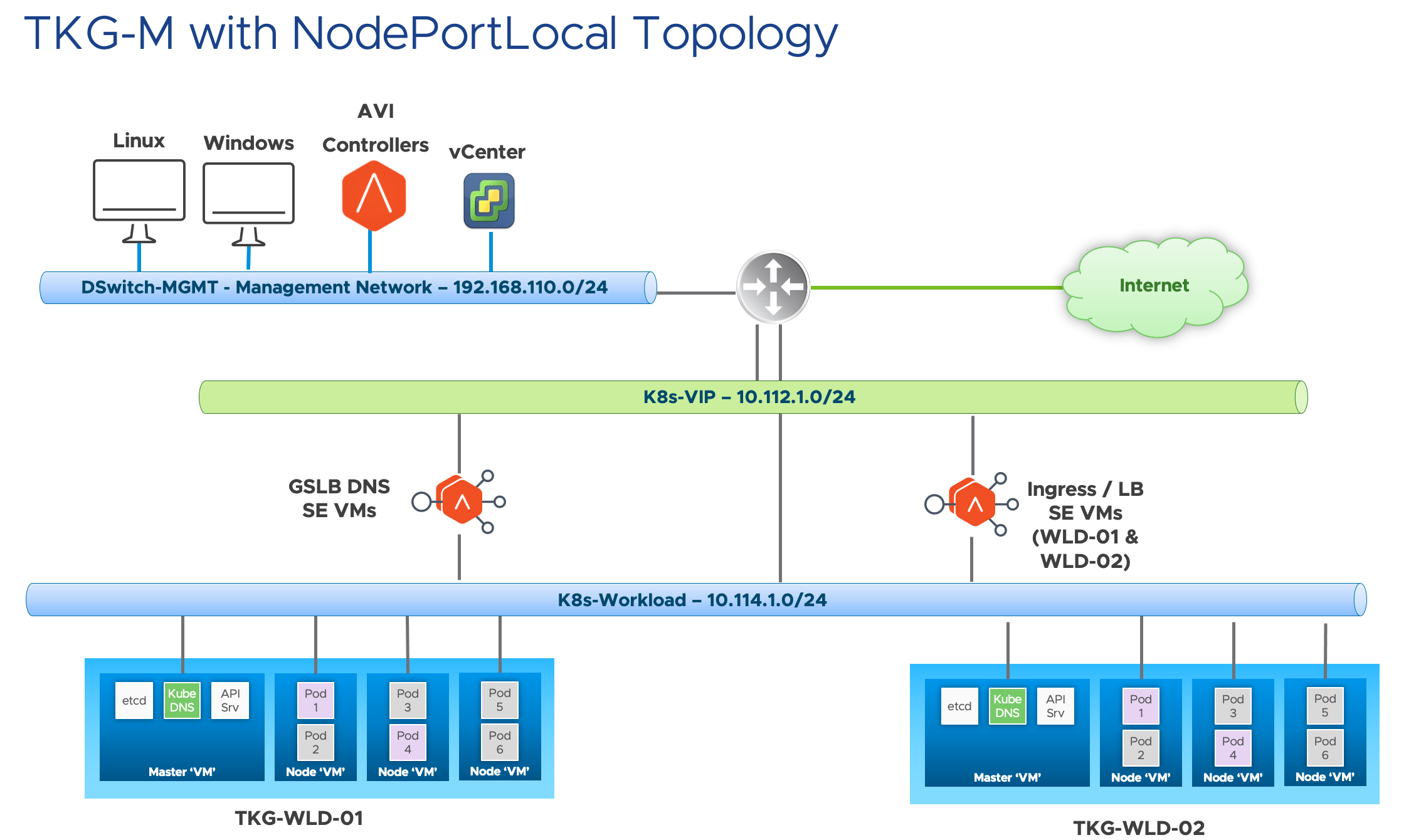

If you have been following my blogs on TKG with AKO, or have been testing AKO yourself, you will soon realize that you need multiple AVI SE Groups: one AVI SE Group per Kubernetes cluster. TKG, powered by ClusterAPI, makes it really easy to create Kubernetes clusters, so if you want to use AKO you will quickly find that you need to create many AVI SE Groups.

Certain customers and colleagues have brought this issue up. Especially in smaller environments, or for clusters with modest throughput/transaction requirements on the Ingress or LB, this 1:1 mapping between Kubernetes cluster and AVI SE Group creates a lot of AVI SE Groups and becomes a manageability problem.

Technical Limitation

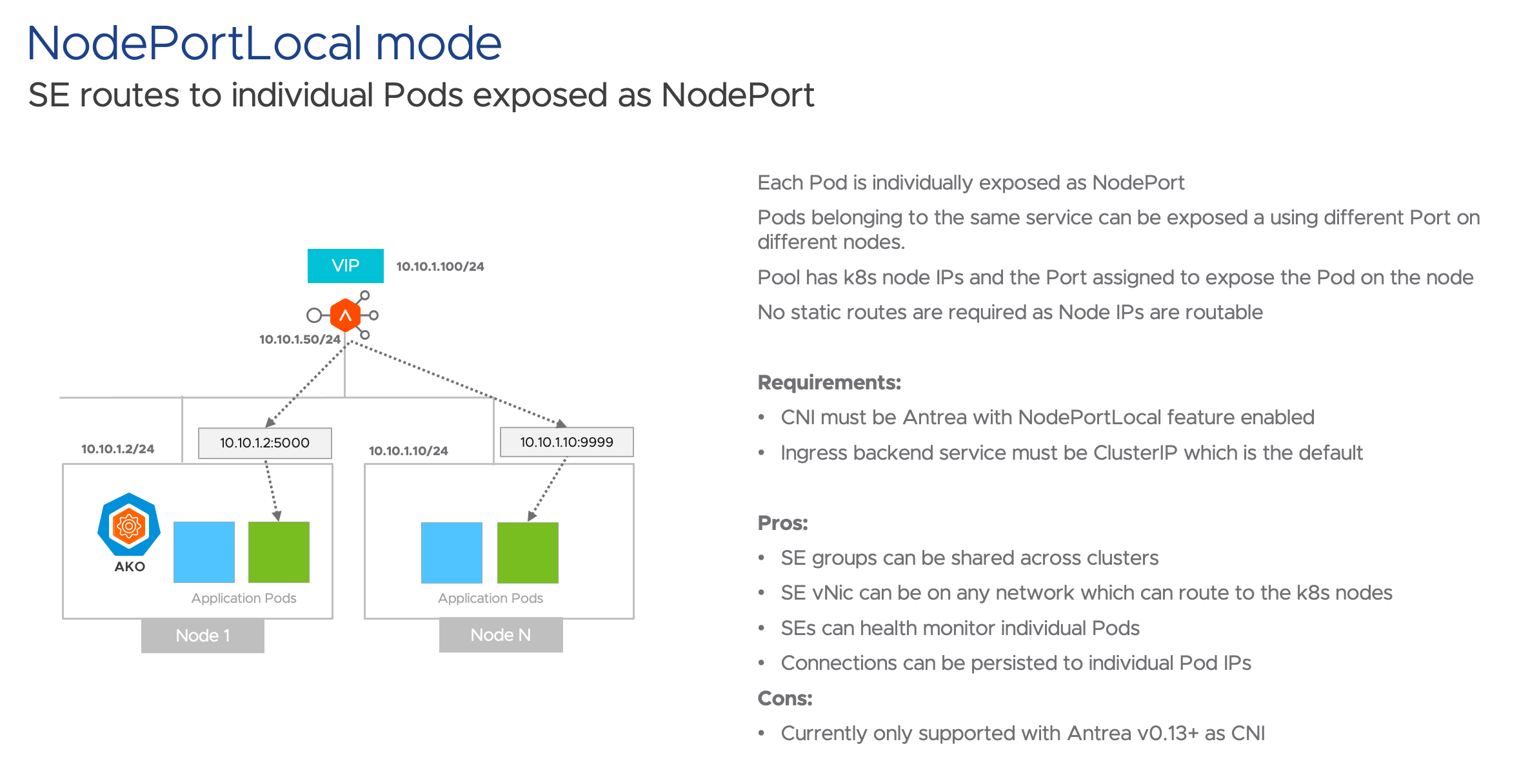

In AVI, there are a few modes which you can utilize.

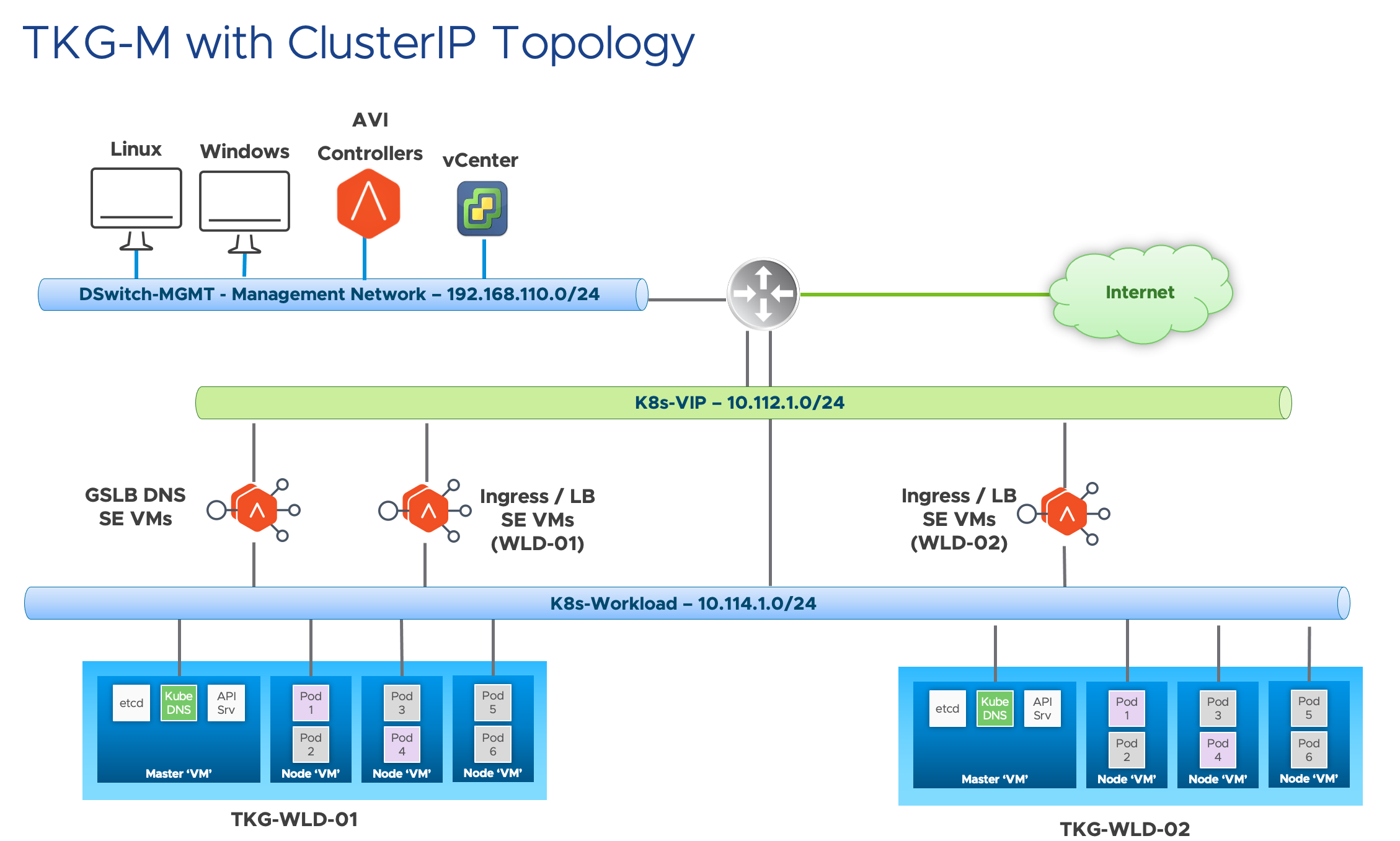

Mode 1: ClusterIP mode

ClusterIP mode provides direct connectivity to the K8s pods, which means you need to connect the AVI SE interface to the worker node network. However, it is restricted to one SE group per cluster, which means you will need more resources and AVI licenses.

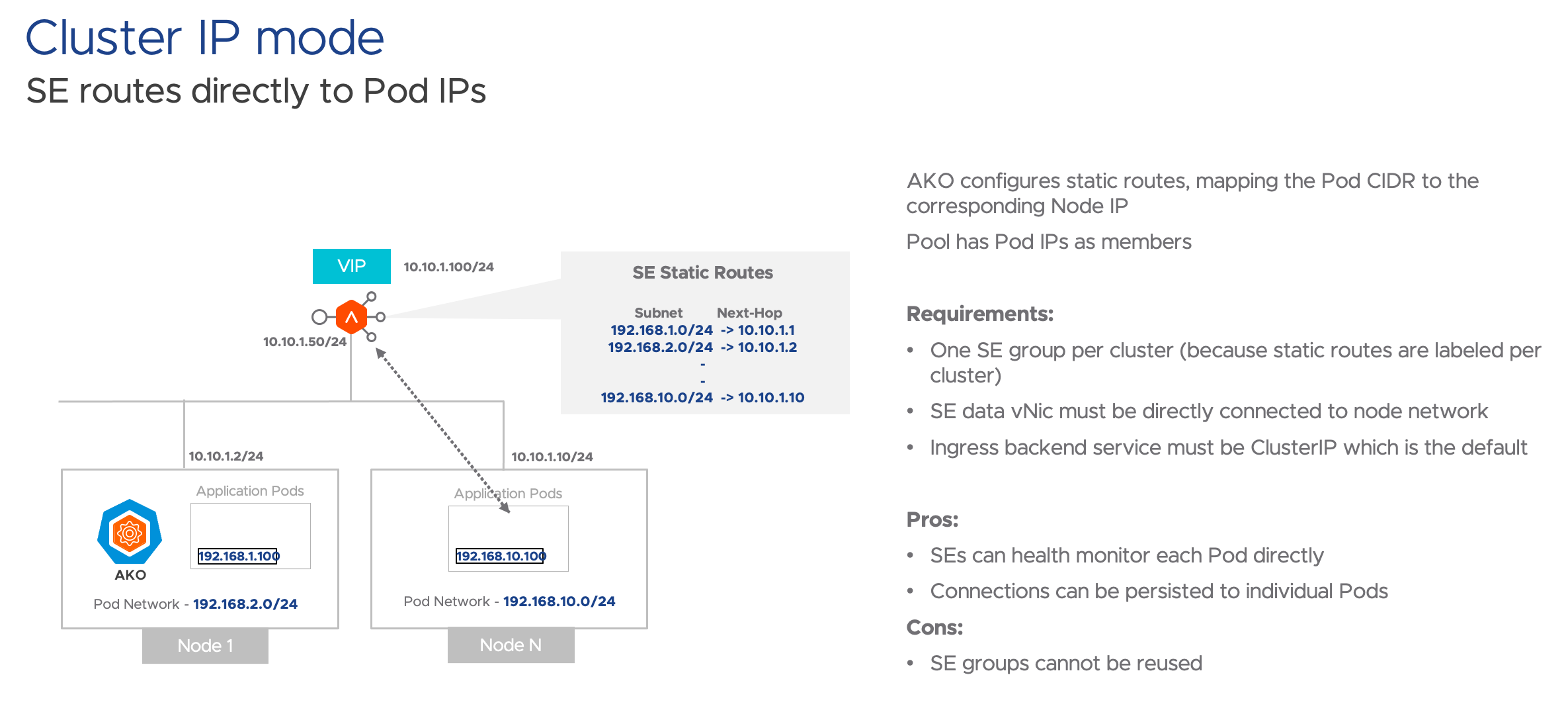

Mode 2: NodePort mode

NodePort mode removes the need for an SE group per TKG cluster. Under the hood, however, kube-proxy opens the NodePort on each and every worker node, even on nodes where the pod does not run, and there is no need for direct connectivity to the pods. The user also needs to change their manifests to use NodePort, which can sometimes be an inconvenience.

Solution

Mode 3: NodePortLocal

NodePortLocal is the best of both worlds: you still use ClusterIP in your manifest, but rather than relying on kube-proxy, there is a single iptables entry per pod, which gives direct connectivity to each pod for monitoring. NodePortLocal also removes the need for an SE group per TKG cluster.
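
To make the per-pod mapping concrete, here is a minimal Python sketch of how a consumer such as AKO could read the backends that Antrea publishes. It assumes, following the Antrea NodePortLocal documentation, that each exposed pod carries a `nodeportlocal.antrea.io` annotation containing a JSON list of `podPort`/`nodeIP`/`nodePort` entries. The helper name `npl_backends` and the port numbers are hypothetical; only the pod names and the node IP come from the lab in this post.

```python
import json

# Assumed annotation key, as described in the Antrea NodePortLocal docs.
NPL_ANNOTATION = "nodeportlocal.antrea.io"


def npl_backends(pod_annotations):
    """Return (node_ip, node_port, pod_port) tuples published for one pod."""
    raw = pod_annotations.get(NPL_ANNOTATION)
    if not raw:
        return []  # pod is not exposed through NodePortLocal
    return [(m["nodeIP"], m["nodePort"], m["podPort"]) for m in json.loads(raw)]


# Hypothetical annotations for three pods running on the same worker node.
# Port numbers are illustrative, not taken from the real cluster.
pods = {
    "hello-kubernetes-594948df-5hg52": {
        NPL_ANNOTATION: '[{"podPort": 8080, "nodeIP": "10.114.1.57", "nodePort": 61000}]'
    },
    "hello-kubernetes-594948df-6tfxn": {
        NPL_ANNOTATION: '[{"podPort": 8080, "nodeIP": "10.114.1.57", "nodePort": 61001}]'
    },
    "hello-kubernetes-594948df-sl54n": {
        NPL_ANNOTATION: '[{"podPort": 8080, "nodeIP": "10.114.1.57", "nodePort": 61002}]'
    },
}

for name, annotations in pods.items():
    for node_ip, node_port, pod_port in npl_backends(annotations):
        print(f"{name}: {node_ip}:{node_port} -> pod port {pod_port}")
```

Each pod ends up behind its own node IP and node port pair, so the load balancer only needs to reach the node network rather than the pod network of any particular cluster.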

Software Versions Used

| Software | Version |
|---|---|
| TKG-m | 1.4 |
| AKO | 1.5.2 |
| AMKO | 1.5.2 |
| AVI Controller | 20.1.6 |
| Antrea | 0.13.3-7ad64b3 |

Screenshots

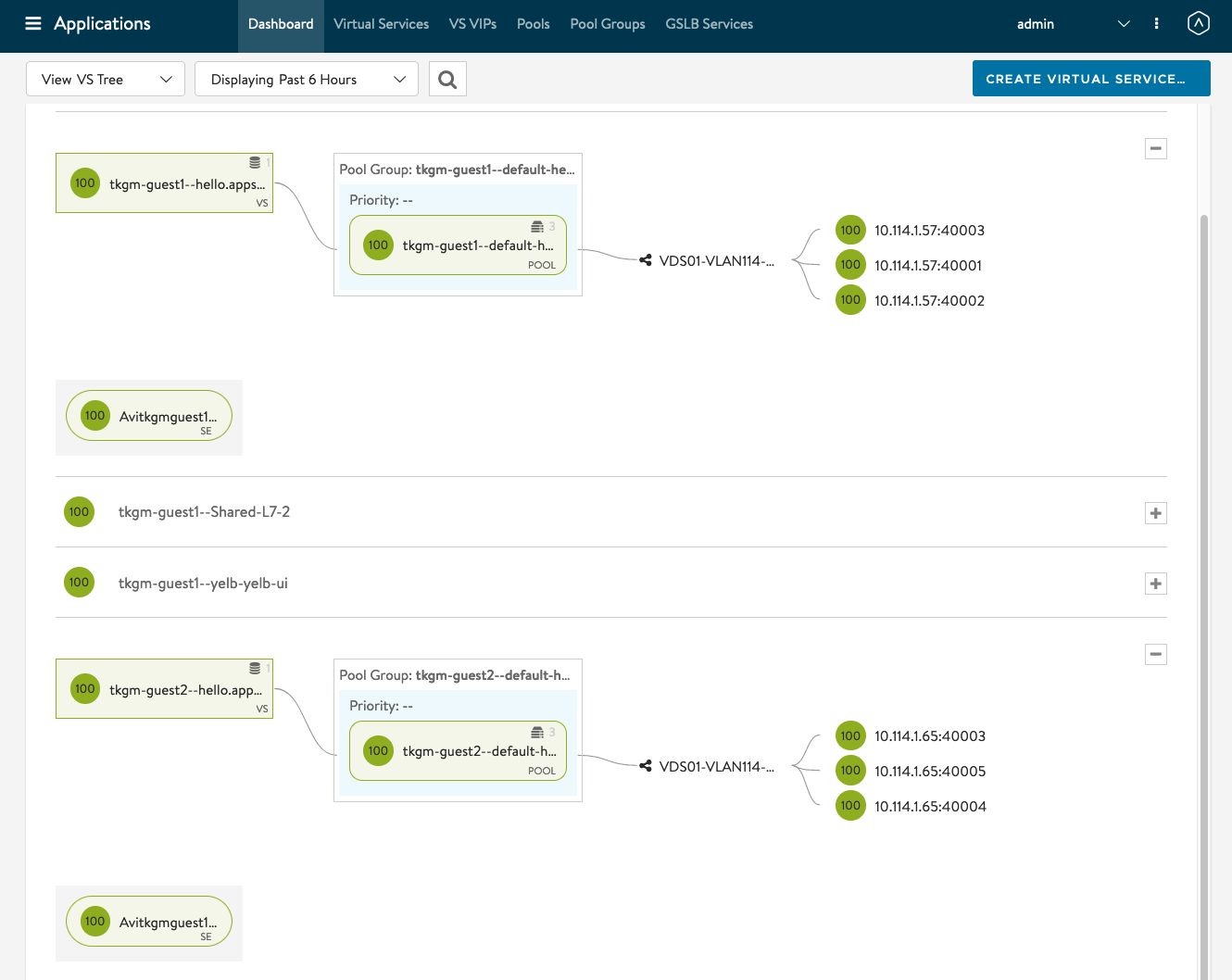

Application Dashboard View. I have 2 TKG clusters, and the worker nodes are on the same subnet but with different IP addresses. You can also see NodePortLocal in action, with different node ports being used.

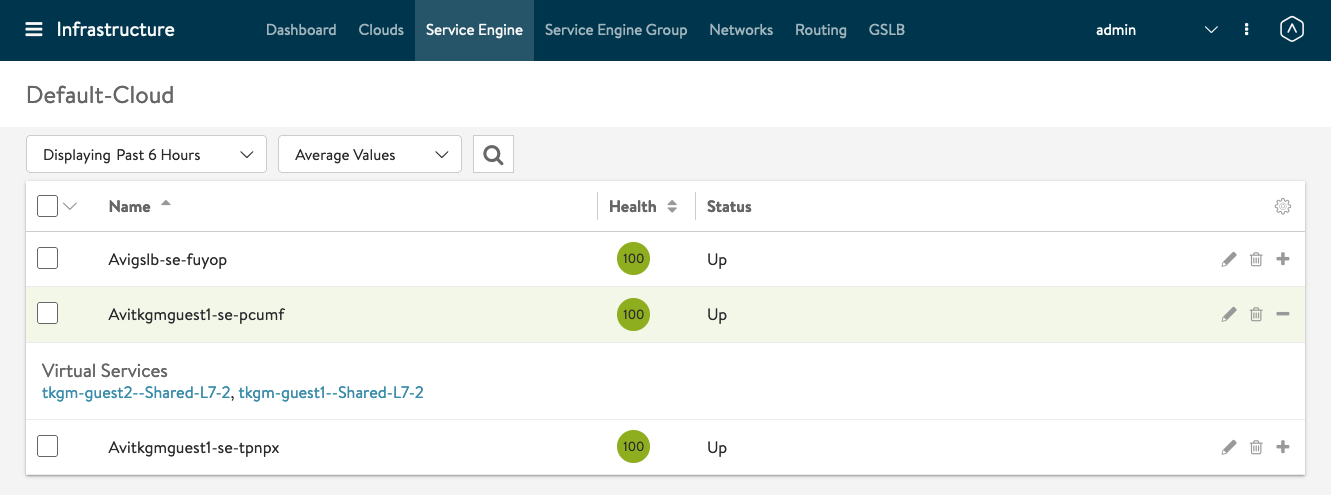

Infrastructure SE View

You can see that the Ingress Virtual Services for tkgm-guest1 and tkgm-guest2 are on the same Service Engine.

    [root@localhost ~]# k get pods -o wide
    NAME                              READY   STATUS    RESTARTS   AGE   IP            NODE                                NOMINATED NODE   READINESS GATES
    hello-kubernetes-594948df-5hg52   1/1     Running   0          12d   100.96.1.21   tkgm-guest1-md-0-57b86cd777-bmzsf   <none>           <none>
    hello-kubernetes-594948df-6tfxn   1/1     Running   0          12d   100.96.1.22   tkgm-guest1-md-0-57b86cd777-bmzsf   <none>           <none>
    hello-kubernetes-594948df-sl54n   1/1     Running   0          12d   100.96.1.20   tkgm-guest1-md-0-57b86cd777-bmzsf   <none>           <none>
    [root@localhost ~]# k get svc
    NAME               TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    hello-kubernetes   ClusterIP   100.66.53.34   <none>        80/TCP    12d
    kubernetes         ClusterIP   100.64.0.1     <none>        443/TCP   12d
    [root@localhost ~]# k get nodes -o wide
    NAME                                STATUS   ROLES                  AGE   VERSION            INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                 KERNEL-VERSION   CONTAINER-RUNTIME
    tkgm-guest1-control-plane-dn8z5     Ready    control-plane,master   12d   v1.21.2+vmware.1   10.114.1.56   10.114.1.56   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6
    tkgm-guest1-md-0-57b86cd777-58tpl   Ready    <none>                 12d   v1.21.2+vmware.1   10.114.1.59   10.114.1.59   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6
    tkgm-guest1-md-0-57b86cd777-bmzsf   Ready    <none>                 12d   v1.21.2+vmware.1   10.114.1.57   10.114.1.57   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6

    [root@localhost ~]# k get pods -o wide
    NAME                              READY   STATUS    RESTARTS   AGE   IP            NODE                                NOMINATED NODE   READINESS GATES
    hello-kubernetes-594948df-lfpcg   1/1     Running   0          12d   100.96.2.8    tkgm-guest2-md-0-6545677fcb-thz7k   <none>           <none>
    hello-kubernetes-594948df-wnkkm   1/1     Running   0          12d   100.96.2.10   tkgm-guest2-md-0-6545677fcb-thz7k   <none>           <none>
    hello-kubernetes-594948df-x6llw   1/1     Running   0          12d   100.96.2.9    tkgm-guest2-md-0-6545677fcb-thz7k   <none>           <none>
    [root@localhost ~]# k get svc
    NAME               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
    hello-kubernetes   ClusterIP   100.66.141.137   <none>        80/TCP    12d
    kubernetes         ClusterIP   100.64.0.1       <none>        443/TCP   12d
    [root@localhost ~]# k get nodes -o wide
    NAME                                STATUS   ROLES                  AGE   VERSION            INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                 KERNEL-VERSION   CONTAINER-RUNTIME
    tkgm-guest2-control-plane-fmj7r     Ready    control-plane,master   12d   v1.21.2+vmware.1   10.114.1.16   10.114.1.16   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6
    tkgm-guest2-md-0-6545677fcb-thz7k   Ready    <none>                 12d   v1.21.2+vmware.1   10.114.1.65   10.114.1.65   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6
    tkgm-guest2-md-0-6545677fcb-wp8cg   Ready    <none>                 12d   v1.21.2+vmware.1   10.114.1.17   10.114.1.17   VMware Photon OS/Linux   4.19.198-1.ph3   containerd://1.4.6

TKG-m Configuration for NodePortLocal

There is nothing particular to configure on the TKG-m side. With TKG-m 1.4, Antrea is the default CNI, and the Antrea version that ships with it, 0.13.x, already supports NodePortLocal.

You can check whether NodePortLocal is enabled by checking the configmap.

    [root@localhost ~]# k get configmap antrea-config-ctb8mftc58 -n kube-system -oyaml
    apiVersion: v1
    data:
      antrea-agent.conf: |
        featureGates:
          AntreaProxy: true
          EndpointSlice: false
          Traceflow: true
          NodePortLocal: true
          AntreaPolicy: true
          FlowExporter: false
          NetworkPolicyStats: false
        trafficEncapMode: encap
        noSNAT: false
        serviceCIDR: 100.64.0.0/13
        tlsCipherSuites: TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_256_GCM_SHA384

Reference: https://antrea.io/docs/main/docs/node-port-local/
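
If you want to script the configmap check rather than eyeball it, the following Python sketch shows one way to test a feature gate in the `antrea-agent.conf` text. It is only an illustration: the helper `feature_gate_enabled` is hypothetical, it uses a simple line match instead of a real YAML parser, and it assumes you have already extracted the `antrea-agent.conf` string from the configmap.

```python
import re


def feature_gate_enabled(agent_conf, gate):
    """Return True if an uncommented `<gate>: true` line exists in agent_conf."""
    pattern = rf"^\s*{re.escape(gate)}\s*:\s*true\s*$"
    return re.search(pattern, agent_conf, flags=re.MULTILINE) is not None


# Excerpt of the antrea-agent.conf content from the configmap in this post.
AGENT_CONF = """\
featureGates:
  AntreaProxy: true
  EndpointSlice: false
  Traceflow: true
  NodePortLocal: true
  AntreaPolicy: true
trafficEncapMode: encap
"""

print("NodePortLocal enabled:", feature_gate_enabled(AGENT_CONF, "NodePortLocal"))
print("EndpointSlice enabled:", feature_gate_enabled(AGENT_CONF, "EndpointSlice"))
```

A commented-out line such as `#  NodePortLocal: true` does not match, which is the behaviour you want when the gate is only listed as a default in the file.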

How to configure AKO to use NodePortLocal?

You can refer to my TKGm 1.4 AMKO Install repo for the sample values.yaml and install scripts for TKG-m, AKO and AMKO. https://github.com/vincenthanjs/tkgm1.4-amko-install

values.yaml for TKG-m Cluster 1 AKO Install. Things to note are cniPlugin: antrea, serviceType: NodePortLocal and the serviceEngineGroupName.

    # Default values for ako.
    # This is a YAML-formatted file.
    # Declare variables to be passed into your templates.

    replicaCount: 1

    image:
      repository: projects.registry.vmware.com/ako/ako
      pullPolicy: IfNotPresent

    ### This section outlines the generic AKO settings
    AKOSettings:
      logLevel: WARN # enum: INFO|DEBUG|WARN|ERROR
      fullSyncFrequency: '1800' # This frequency controls how often AKO polls the Avi controller to update itself with cloud configurations.
      apiServerPort: 8080 # Internal port for AKO's API server for the liveness probe of the AKO pod default=8080
      deleteConfig: 'false' # Has to be set to true in configmap if user wants to delete AKO created objects from AVI
      disableStaticRouteSync: 'false' # If the POD networks are reachable from the Avi SE, set this knob to true.
      clusterName: tkgm-guest1 # A unique identifier for the kubernetes cluster, that helps distinguish the objects for this cluster in the avi controller. // MUST-EDIT
      cniPlugin: 'antrea' # Set the string if your CNI is calico or openshift. enum: calico|canal|flannel|openshift|antrea|ncp
      enableEVH: false # This enables the Enhanced Virtual Hosting Model in Avi Controller for the Virtual Services
      layer7Only: false # If this flag is switched on, then AKO will only do layer 7 loadbalancing.
      # NamespaceSelector contains label key and value used for namespacemigration
      # Same label has to be present on namespace/s which needs migration/sync to AKO
      namespaceSelector:
        labelKey: ''
        labelValue: ''
      servicesAPI: false # Flag that enables AKO in services API mode: https://kubernetes-sigs.github.io/service-apis/. Currently implemented only for L4. This flag uses the upstream GA APIs which are not backward compatible
      # with the advancedL4 APIs which uses a fork and a version of v1alpha1pre1

    ### This section outlines the network settings for virtualservices.
    NetworkSettings:
      ## This list of network and cidrs are used in pool placement network for vcenter cloud.
      ## Node Network details are not needed when in nodeport mode / static routes are disabled / non vcenter clouds.
      #nodeNetworkList: []
      nodeNetworkList:
        - networkName: "VDS01-VLAN114-Workload"
          cidrs:
            - 10.114.1.0/24
      enableRHI: false # This is a cluster wide setting for BGP peering.
      nsxtT1LR: '' # T1 Logical Segment mapping for backend network. Only applies to NSX-T cloud.
      bgpPeerLabels: [] # Select BGP peers using bgpPeerLabels, for selective VsVip advertisement.
      # bgpPeerLabels:
      #   - peer1
      #   - peer2
      #vipNetworkList: [] # Network information of the VIP network. Multiple networks allowed only for AWS Cloud.
      vipNetworkList:
        - networkName: "VDS01-VLAN112-VIP"
          cidr: 10.112.1.0/24

    ### This section outlines all the knobs used to control Layer 7 loadbalancing settings in AKO.
    L7Settings:
      defaultIngController: 'true'
      noPGForSNI: false # Switching this knob to true, will get rid of poolgroups from SNI VSes. Do not use this flag, if you don't want http caching. This will be deprecated once the controller support caching on PGs.
      serviceType: NodePortLocal # enum NodePort|ClusterIP|NodePortLocal
      shardVSSize: LARGE # Use this to control the layer 7 VS numbers. This applies to both secure/insecure VSes but does not apply for passthrough. ENUMs: LARGE, MEDIUM, SMALL, DEDICATED
      passthroughShardSize: SMALL # Control the passthrough virtualservice numbers using this ENUM. ENUMs: LARGE, MEDIUM, SMALL

    ### This section outlines all the knobs used to control Layer 4 loadbalancing settings in AKO.
    L4Settings:
      advancedL4: 'false' # Use this knob to control the settings for the services API usage. Default to not using services APIs: https://github.com/kubernetes-sigs/service-apis
      defaultDomain: '' # If multiple sub-domains are configured in the cloud, use this knob to set the default sub-domain to use for L4 VSes.
      autoFQDN: default # ENUM: default(<svc>.<ns>.<subdomain>), flat (<svc>-<ns>.<subdomain>), "disabled" If the value is disabled then the FQDN generation is disabled.

    ### This section outlines settings on the Avi controller that affects AKO's functionality.
    ControllerSettings:
      serviceEngineGroupName: seg-tkgm-guest1 # Name of the ServiceEngine Group.
      controllerVersion: 20.1.6 # The controller API version
      cloudName: Default-Cloud # The configured cloud name on the Avi controller.
      controllerHost: '10.115.1.52' # IP address or Hostname of Avi Controller
      tenantsPerCluster: 'false' # If set to true, AKO will map each kubernetes cluster uniquely to a tenant in Avi
      tenantName: admin # Name of the tenant where all the AKO objects will be created in AVI. // Required only if tenantsPerCluster is set to True

    nodePortSelector: # Only applicable if serviceType is NodePort
      key: ''
      value: ''

    resources:
      limits:
        cpu: 250m
        memory: 300Mi
      requests:
        cpu: 100m
        memory: 200Mi

    podSecurityContext: {}

    rbac:
      # Creates the pod security policy if set to true
      pspEnable: false

    avicredentials:
      username: 'admin'
      password: 'VMware1!'
      certificateAuthorityData:

    persistentVolumeClaim: ''
    mountPath: /log
    logFile: avi.log

values.yaml for TKG-m Cluster 2 AKO Install. Things to note are the cniPlugin: antrea and the serviceEngineGroupName, which is the same SE group that I defined for TKG-m Cluster 1.

    # Default values for ako.
    # This is a YAML-formatted file.
    # Declare variables to be passed into your templates.

    replicaCount: 1

    image:
      repository: projects.registry.vmware.com/ako/ako
      pullPolicy: IfNotPresent

    ### This section outlines the generic AKO settings
    AKOSettings:
      logLevel: WARN # enum: INFO|DEBUG|WARN|ERROR
      fullSyncFrequency: '1800' # This frequency controls how often AKO polls the Avi controller to update itself with cloud configurations.
      apiServerPort: 8080 # Internal port for AKO's API server for the liveness probe of the AKO pod default=8080
      deleteConfig: 'false' # Has to be set to true in configmap if user wants to delete AKO created objects from AVI
      disableStaticRouteSync: 'false' # If the POD networks are reachable from the Avi SE, set this knob to true.
      clusterName: tkgm-guest2 # A unique identifier for the kubernetes cluster, that helps distinguish the objects for this cluster in the avi controller. // MUST-EDIT
      cniPlugin: 'antrea' # Set the string if your CNI is calico or openshift. enum: calico|canal|flannel|openshift|antrea|ncp
      enableEVH: false # This enables the Enhanced Virtual Hosting Model in Avi Controller for the Virtual Services
      layer7Only: false # If this flag is switched on, then AKO will only do layer 7 loadbalancing.
      # NamespaceSelector contains label key and value used for namespacemigration
      # Same label has to be present on namespace/s which needs migration/sync to AKO
      namespaceSelector:
        labelKey: ''
        labelValue: ''
      servicesAPI: false # Flag that enables AKO in services API mode: https://kubernetes-sigs.github.io/service-apis/. Currently implemented only for L4. This flag uses the upstream GA APIs which are not backward compatible
      # with the advancedL4 APIs which uses a fork and a version of v1alpha1pre1

    ### This section outlines the network settings for virtualservices.
    NetworkSettings:
      ## This list of network and cidrs are used in pool placement network for vcenter cloud.
      ## Node Network details are not needed when in nodeport mode / static routes are disabled / non vcenter clouds.
      #nodeNetworkList: []
      nodeNetworkList:
        - networkName: "VDS01-VLAN114-Workload"
          cidrs:
            - 10.114.1.0/24
      enableRHI: false # This is a cluster wide setting for BGP peering.
      nsxtT1LR: '' # T1 Logical Segment mapping for backend network. Only applies to NSX-T cloud.
      bgpPeerLabels: [] # Select BGP peers using bgpPeerLabels, for selective VsVip advertisement.
      # bgpPeerLabels:
      #   - peer1
      #   - peer2
      #vipNetworkList: [] # Network information of the VIP network. Multiple networks allowed only for AWS Cloud.
      vipNetworkList:
        - networkName: "VDS01-VLAN112-VIP"
          cidr: 10.112.1.0/24

    ### This section outlines all the knobs used to control Layer 7 loadbalancing settings in AKO.
    L7Settings:
      defaultIngController: 'true'
      noPGForSNI: false # Switching this knob to true, will get rid of poolgroups from SNI VSes. Do not use this flag, if you don't want http caching. This will be deprecated once the controller support caching on PGs.
      serviceType: NodePortLocal # enum NodePort|ClusterIP|NodePortLocal
      shardVSSize: LARGE # Use this to control the layer 7 VS numbers. This applies to both secure/insecure VSes but does not apply for passthrough. ENUMs: LARGE, MEDIUM, SMALL, DEDICATED
      passthroughShardSize: SMALL # Control the passthrough virtualservice numbers using this ENUM. ENUMs: LARGE, MEDIUM, SMALL

    ### This section outlines all the knobs used to control Layer 4 loadbalancing settings in AKO.
    L4Settings:
      advancedL4: 'false' # Use this knob to control the settings for the services API usage. Default to not using services APIs: https://github.com/kubernetes-sigs/service-apis
      defaultDomain: '' # If multiple sub-domains are configured in the cloud, use this knob to set the default sub-domain to use for L4 VSes.
      autoFQDN: default # ENUM: default(<svc>.<ns>.<subdomain>), flat (<svc>-<ns>.<subdomain>), "disabled" If the value is disabled then the FQDN generation is disabled.

    ### This section outlines settings on the Avi controller that affects AKO's functionality.
    ControllerSettings:
      serviceEngineGroupName: seg-tkgm-guest1 # Name of the ServiceEngine Group.
      controllerVersion: 20.1.6 # The controller API version
      cloudName: Default-Cloud # The configured cloud name on the Avi controller.
      controllerHost: '10.115.1.52' # IP address or Hostname of Avi Controller
      tenantsPerCluster: 'false' # If set to true, AKO will map each kubernetes cluster uniquely to a tenant in Avi
      tenantName: admin # Name of the tenant where all the AKO objects will be created in AVI. // Required only if tenantsPerCluster is set to True

    nodePortSelector: # Only applicable if serviceType is NodePort
      key: ''
      value: ''

    resources:
      limits:
        cpu: 250m
        memory: 300Mi
      requests:
        cpu: 100m
        memory: 200Mi

    podSecurityContext: {}

    rbac:
      # Creates the pod security policy if set to true
      pspEnable: false

    avicredentials:
      username: 'admin'
      password: 'VMware1!'
      certificateAuthorityData:

    persistentVolumeClaim: ''
    mountPath: /log
    logFile: avi.log
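
The two files differ only in clusterName, so it is easy to get a copy wrong. As a rough sanity check, the Python sketch below compares the handful of settings that matter for sharing an SE Group. The function `shared_seg_problems` and the flattened dictionaries are hypothetical; in practice you would load the real values.yaml files with a YAML parser and pull the same four keys out of AKOSettings, L7Settings and ControllerSettings.

```python
def shared_seg_problems(clusters):
    """Return a list of problems that would prevent sharing one SE Group."""
    problems = []
    names = [c["clusterName"] for c in clusters]
    if len(set(names)) != len(names):
        problems.append("clusterName must be unique per cluster")
    if len({c["serviceEngineGroupName"] for c in clusters}) != 1:
        problems.append("serviceEngineGroupName differs between clusters")
    for c in clusters:
        if c["serviceType"] != "NodePortLocal":
            problems.append(f"{c['clusterName']}: serviceType is not NodePortLocal")
        if c["cniPlugin"] != "antrea":
            problems.append(f"{c['clusterName']}: cniPlugin is not antrea")
    return problems


# Hand-flattened copies of the relevant keys from the two values.yaml files.
guest1 = {
    "clusterName": "tkgm-guest1",
    "cniPlugin": "antrea",
    "serviceType": "NodePortLocal",
    "serviceEngineGroupName": "seg-tkgm-guest1",
}
guest2 = dict(guest1, clusterName="tkgm-guest2")

print(shared_seg_problems([guest1, guest2]))
```

An empty list means both clusters point at the same SE Group, use NodePortLocal with Antrea, and still have distinct cluster names so their objects can be told apart on the AVI controller.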

Conclusion

With Antrea NodePortLocal (NPL) and AKO leveraging NPL, we have the option of sharing a single AVI SE Group across multiple TKG clusters. This reduces the resources and licenses required and simplifies the management of AVI SE Groups, especially when you can quickly and easily deploy multiple TKG clusters with ClusterAPI.